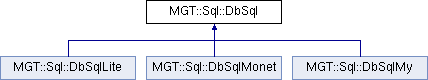

Inheritance diagram for MGT::Sql::DbSql:

Public Member Functions | |

| def | getTableDescr |

| def | selectAll |

| def | selectScalar |

| def | selectAsNx1Dict |

| def | selectAs1Col |

| def | dropIndex |

| def | createIndices |

| def | createTableAs |

| def | saveRecords |

| def | createTableFromArray |

| def | createTableFromCsv |

| def | selectAsArray |

| def | exportAsCsv |

Static Public Attributes | |

| tuple | defField = SqlField(name="fld",type="char(40)") |

| Default SQL field definition - a fall-back field type to create when nothing more specific is provided by the user. | |

Detailed Description

Wrapper around DB-API Connection class with some convenience methods. @todo Convert this to using SQL Alchemy. SQL Alchemy imposed too big abstraction penalty in the past, but this might not be the case anymore assuming carefully following its best use practices. We will still likely to need our bulk loading methods.

Member Function Documentation

| def MGT::Sql::DbSql::createIndices | ( | self, | |

| names, | |||

| table | |||

| ) |

We always create and drop indices as tablename_indexname because in some DBMS (MonetDB) index names should be globally unique. This can be specialized for MySQL and others that support ALTER TABLE ... ADD INDEX ... ADD INDEX

Reimplemented in MGT::Sql::DbSqlMonet.

| def MGT::Sql::DbSql::createTableAs | ( | self, | |

| name, | |||

| select, | |||

indices = None |

|||

| ) |

Save the results of SQL SELECT as a new table. This abstracts "create ... as ..." operation from minor differences in SQL dialects. For example, MonetDB Feb2008 required 'with data' at the end, MySQL 5 and SQLite do not recognize 'with data' Override in derived classes if necessary. @param name name of table to (re-)create - existing table will be replaced @param select SQL select statement @param indices if present, will be passed to createIndices() method

Reimplemented in MGT::Sql::DbSqlMonet.

| def MGT::Sql::DbSql::createTableFromArray | ( | self, | |

| name, | |||

| arr, | |||

withData = True, |

|||

returnInserter = False, |

|||

indices = None |

|||

| ) |

Create a table that reflects the fields of Numpy record array. @return BulkInserter object or None @param name The name of the new table @param arr Numpy array to use as template @param withData if True, also load data from array @param returnInserter if True, return a BulkInserter object; The caller then is resposible for closing the inserter object. @param indices, if not None, should be a dictionary with arguments to createIndices, except the 'table' argument, which will be taken from 'name'. All fields are constrained as NOT NULL, as Numpy does not have NULL values, and NOT NULL constraint speeds up queries.

| def MGT::Sql::DbSql::createTableFromCsv | ( | self, | |

| name, | |||

| csvFile, | |||

fieldsMap = {}, |

|||

defField = None, |

|||

hasHeader = False, |

|||

dialect = "excel-tab", |

|||

dialect_options = {}, |

|||

indices = None, |

|||

preProc = None |

|||

| ) |

Create and fill a table from CSV file. The intention is to provide a single command to load a CSV file into SQL table where reasonable default values can be generated for all options. @param name The name of the new table @param csv Either a file name, in which case csv.reader(openCompresed(),dialect=dialect) will be used to open the file, or it should be an existing file stream, or csv.reader object (in the latter case dialect and dialect_options parameters are ignored). @param fieldsMap A dictionary that either maps field position to SqlField instances (if hasHeader is False) or maps field names to SqlField instances (if hasHeader is True). In the latter case, each SqlField instance can replace the name from the header, or leave the name as None, in which case the name supplied by the header will be used. @param defField SqlField instance to generate default SQL definitions for fields not mapped by fieldsMap. If hasHeader is False, defField.name will be used as a prefix, such that the field name becomes prefix_xxx where xxx is the absolute field position in CSV row. Otherwise, defField.name is ignored, and only the other attributes are used to define default field type etc. @param hasHeader tells whether to treat the first row of CSV file as header @param dialect Dialect string defined in csv module @param dialect_options Passed to csv.reader(**dialect_options) @param indices, if not None, should be a dictionary with arguments to createIndices, except the 'table' argument, which will be taken from 'name' @param preProc If specified, this should be a method that will be applied to each row returned by csv.reader. The method must return a sequence (possibly empty) of new rows, which will be inserted into SQL table instead of the original row. Their size and types must match the original row. The preProc must have this signature: preProc(row,fields,nameToInd) where row is returned by csv.reader.next(); fields is a list of SqlField objects matching the row fields; nameToInd is a dictionary mapping field names to indexes of fields in the row. The last two parameters allow preProc's code to access row elements by field names, e.g. row[nameToInd["seqid"]]. The preProc parameter addresses a common use case where the input file is very large but we need to load into the SQL DB only a small subset of it for which a simple filter condition exists such as set membership. It also covers simple manipulation of input data such as various string substitutions. Example: preProc = lambda row,fields,nameToInd,idSet=set(1,2,3): \ ( row, ) if row[namesToInd["seqId"]] in idSet else (,) createTableFromCsv(...,preProc=preProc)

| def MGT::Sql::DbSql::dropIndex | ( | self, | |

| name, | |||

| table, | |||

rawName = False |

|||

| ) |

We always create and drop indices as tablename_indexname because in some DBMS (MonetDB) index names should be globally unique.

Reimplemented in MGT::Sql::DbSqlMonet.

| def MGT::Sql::DbSql::exportAsCsv | ( | self, | |

| sql, | |||

| out, | |||

withHeader = True, |

|||

bufLen = 100000, |

|||

dialect = "excel-tab", |

|||

dialect_options = {"lineterminator":"\n"}, |

|||

comment = None, |

|||

sqlAsComment = False, |

|||

commentEscape = '#', |

|||

epilog = None |

|||

| ) |

Excecute SQL and export the result as CSV file. @param sql SQL select statement to export results of @param out Either file name, or file stream object, or CSV writer object @param withHeader If True, write the field names as the header @param bufLen Size (in number of records) of the internal memory buffer used when moving SQL result set into the output file @param dialect Dialect string defined in csv module @param dialect_options Passed to csv.writer(**dialect_options) @param comment if not None, this string will be printed at the top @param sqlAsComment if True, will print sql statement as an extra comment line @param commentEscape this string will be inserted at the start of every comment line @note To output any comments, out should not be a csv.writer instance @note We set the default lineterminator to Linux style '\n', as opposed to Python's default of Windows style '\r\n'

| def MGT::Sql::DbSql::getTableDescr | ( | self, | |

| name | |||

| ) |

Return table description as seen by DB API module

| def MGT::Sql::DbSql::saveRecords | ( | self, | |

| records, | |||

| table | |||

| ) |

Create a new table and save records into it. @param records - iterable with each element been a sequence of field values itself @param table - instance of SqlTable description class

| def MGT::Sql::DbSql::selectAll | ( | self, | |

| sql, | |||

| kw | |||

| ) |

Convenience method that does for select statement and execute+fetchall in one step. Use for statements with small result set. @param sql SQL SELECT statement @return result of cursor.fetchall (sequence of tuples)

| def MGT::Sql::DbSql::selectAs1Col | ( | self, | |

| sql, | |||

| kw | |||

| ) |

Execute sql that must return one column and return result as 1D sequence.

| def MGT::Sql::DbSql::selectAsArray | ( | self, | |

| sql | |||

| ) |

Execute SQL select and return the entire result set as Numpy record array.

| def MGT::Sql::DbSql::selectAsNx1Dict | ( | self, | |

| sql, | |||

| kw | |||

| ) |

Execute sql that must return two columns with Nx1 relation and return result as dict(first->second).

| def MGT::Sql::DbSql::selectScalar | ( | self, | |

| sql, | |||

| kw | |||

| ) |

Execute sql that must return a single row with a single column and return result as scalar value.

The documentation for this class was generated from the following file:

- mgtaxa/MGT/Sql.py

1.7.2

1.7.2