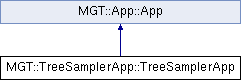

Inheritance diagram for MGT::TreeSamplerApp::TreeSamplerApp:

Public Member Functions | |

| def | __init__ |

| def | initWork |

| def | getTableName |

| def | getFilePath |

| def | doWork |

| def | mkDbSampleCounts |

| def | loadSampleCountsMem |

| def | setIsUnderUnclassMem |

| def | markTrainable |

| def | unused_mkDbTestingTaxa |

| def | unused_lowRankTestPairs |

| def | unused_sampleTestPairs |

| def | isTestTerm |

| def | selectTestTaxa |

| def | markTraining |

| def | checkPostMarkTraining |

| def | statTestTaxa |

| def | writeTraining |

| def | pickRandomSampleCounts |

| def | writeTesting |

Public Attributes | |

| idLabRecs | |

| Try to allocate enough idLab records for both training and testing, but wrap it in ArrayAppender just in case we reach the end at some point. | |

| labToName | |

| labToName items that are always present, others will be added later - "rj" reject group, always 0; "bg" background samples, always 1 | |

| sampNTrainSelf | |

Static Public Attributes | |

| string | termTopRankSeq = "_sequence" |

| Special constant to control splitting - will cause splitting along nodes with directly attached sequence. | |

| string | termTopRankLeaf = "_leaf" |

| Special constant to control splitting - will cause splitting along leaf nodes. | |

Detailed Description

Sample chunks of available training sequence by concatenating all sequences for each taxid. Concatenating (as opposed to chunking each sequence individually) greatly simplifies random sampling of taxid chunks w/o replacement and increases the amount of sample data for small sequences. It creates chimeric k-mer vectors however (but not chimeric k-mers because we insert spacers).
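The concatenate-then-chunk idea described above can be sketched as follows. This is a minimal illustration, not the class's actual code: the spacer symbol "N", the helper names, the toy taxids and the fixed, non-overlapping chunk layout are all assumptions made for the example.

```python
import random

def concat_with_spacers(seqs, spacer="N"):
    """Join all sequences of one taxid; the spacer (assumed to be 'N' here)
    keeps k-mers from spanning two different source sequences."""
    return spacer.join(seqs)

def sample_chunks(concat, chunk_len, n_chunks, rng):
    """Pick up to n_chunks non-overlapping chunks without replacement."""
    starts = list(range(0, len(concat) - chunk_len + 1, chunk_len))
    picked = rng.sample(starts, min(n_chunks, len(starts)))
    return [concat[s:s + chunk_len] for s in sorted(picked)]

# Toy data with hypothetical taxids.
seqs_by_taxid = {101: ["ACGTAC", "GGCC"], 102: ["TTTTAAAA"]}
rng = random.Random(0)
chunks = {taxid: sample_chunks(concat_with_spacers(seqs), 4, 2, rng)
          for taxid, seqs in seqs_by_taxid.items()}
```

In this toy run the second chunk of taxid 101 straddles two source sequences, which is the "chimeric k-mer vector" case: the chunk mixes sequences, but any k-mer that would cross the boundary contains the spacer and can be discarded.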

Constructor & Destructor Documentation

| def MGT::TreeSamplerApp::TreeSamplerApp::__init__ | ( | self, | |

| args, | |||

| opt | |||

| ) |

Constructor.

@param args optional command line arguments to parse -

if executing as a module, should pass None or sys.argv[1:], else - [], which should result in

default values generated for all options not defined by opt.

@param opt Struct instance - values defined here will override those parsed from args.

Two basic use patterns:

if running as a shell script and parsing actual command line arguments:

app = App(args=None)

app.run()

if calling from Python code:

construct opt as a Struct() instance, specify only attributes with non-default values, then

app = App(opt=opt) #that fills yet unset options with defaults

app.run() #either runs in the same process, or submits itself or a set of other Apps to batch queue

Reimplemented from MGT::App::App.
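The precedence rule stated above (values in opt override those parsed from args, and defaults fill the rest) can be illustrated with a small stand-in. This sketch does not use MGT::App::App or Struct: it substitutes argparse and types.SimpleNamespace from the standard library, and the option names are hypothetical.

```python
import argparse
from types import SimpleNamespace

def resolve_options(args=None, opt=None):
    """Parse args (None means sys.argv[1:], [] means defaults only),
    then let attributes set on opt override the parsed values."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--n-test", type=int, default=10)  # hypothetical option
    parser.add_argument("--db-name", default="samples")    # hypothetical option
    parsed = vars(parser.parse_args(args))
    if opt is not None:
        parsed.update(vars(opt))
    return SimpleNamespace(**parsed)

# Calling from Python code: set only the non-default values in opt.
opts = resolve_options(args=[], opt=SimpleNamespace(n_test=25))
```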

Member Function Documentation

| def MGT::TreeSamplerApp::TreeSamplerApp::checkPostMarkTraining | ( | self ) |

Consistency check after a call to markTraining().

| def MGT::TreeSamplerApp::TreeSamplerApp::doWork | ( | self, | |

| kw | |||

| ) |

Do the actual work. Must be redefined in the derived classes. Should not be called directly by the user except from doWork() in a derived class. Should work with an empty keyword dict, using only self.opt. If doing batch submission of other App instances, must return a list of sink (final) BatchJob objects.

Reimplemented from MGT::App::App.

| def MGT::TreeSamplerApp::TreeSamplerApp::getFilePath | ( | self, | |

| name | |||

| ) |

Same as getTableName() but for files.

| def MGT::TreeSamplerApp::TreeSamplerApp::getTableName | ( | self, | |

| name | |||

| ) |

Given a stem table name, return full table name unique for this object (prefix+name). @param name - stem name

| def MGT::TreeSamplerApp::TreeSamplerApp::initWork | ( | self, | |

| kw | |||

| ) |

Perform common initialization right before doing the actual work in doWork(). Must be redefined in the derived classes. Should not be called directly by the user except from initWork() in a derived class. This one can create large objects because they are not passed through the batch submission, but immediately used within the same process.

Reimplemented from MGT::App::App.

| def MGT::TreeSamplerApp::TreeSamplerApp::isTestTerm | ( | self, | |

| node | |||

| ) |

Return True if the node is designated as a testing terminal. If True, the node and its subtree can be used only in their entirety, either for the training or for the testing split.

The current implementation checks whether the node's rank is present in self.opt.termTopRanks, with two special names allowed in that list:

1) when termTopRanks contains an element equal to the self.termTopRankSeq constant, we return True for every node that has directly attached sequence;

2) when termTopRanks contains an element equal to the self.termTopRankLeaf constant, we return True for every leaf node (a node without children).

The typical use is to have either actual ranks in self.opt.termTopRanks or one of the special constants. Redefine this method in derived classes to use a more complicated splitting criterion.

With the default implementation, if, for example, we return True for species nodes, then sequences under a species will never be split between training and testing. That ensures proper validation of a claim that testing accuracy is provided for the discovery of previously unseen species.

@note The PhyloPythia 2006 paper used splits at the level of strains (or whatever taxonomy node the leaf sequence was assigned to).

@attention It is often the case that a species node has directly attached sequence while also having strains under it with their own sequence. Most of the time, the species-level sequence is not genome-wide sequencing output but rather sequences of some specific genes. There are examples when it is a full plasmid sequence, e.g. Aeromonas salmonicida taxid 645. If we ignore everything but WGS and full genomes in sample database construction, and drop plasmids, we are probably safe. However, dealing with it in the general case probably makes sense if we just pick leaf nodes as testing terminals and ignore any sequence attached to their upper nodes.
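A minimal sketch of the rule described for isTestTerm() is given below. The Node class, its field names and the free function are hypothetical stand-ins for the real taxonomy node and method; only the two special constants are taken from this page.

```python
from dataclasses import dataclass, field

TERM_TOP_RANK_SEQ = "_sequence"   # value of termTopRankSeq
TERM_TOP_RANK_LEAF = "_leaf"      # value of termTopRankLeaf

@dataclass
class Node:
    rank: str
    has_own_seq: bool = False     # hypothetical: sequence attached directly
    children: list = field(default_factory=list)

def is_test_term(node, term_top_ranks):
    """Return True if node is a testing terminal under the described rule."""
    if TERM_TOP_RANK_SEQ in term_top_ranks and node.has_own_seq:
        return True
    if TERM_TOP_RANK_LEAF in term_top_ranks and not node.children:
        return True
    return node.rank in term_top_ranks

strain = Node("no rank", has_own_seq=True)
species = Node("species", children=[strain])
genus = Node("genus", children=[species])
```

With term_top_ranks set to ["species"], the species node is terminal and the genus above it is not, so a species and everything under it always lands on one side of the split.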

| def MGT::TreeSamplerApp::TreeSamplerApp::loadSampleCountsMem | ( | self ) |

Transfer sample counts from DB into in-memory taxonomy tree object.

| def MGT::TreeSamplerApp::TreeSamplerApp::markTrainable | ( | self ) |

Mark all nodes that have enough samples for training with and without validation.

When we train for subsequent validation with testing set, we cannot use any 'unclassified'

samples because they might actually represent the excluded testing set. On the other

hand, there is no reason not to use any 'unclassified' samples under a 'classified'

node for the final re-training.

@pre loadSampleCountsMem() and setIsUnderUnclassMem() were called

@post in-memory taxaTree nodes have the following attributes set:

isTrainable - trainable with 'unclassified' samples included

isTrainableTest - trainable for validation. A node can still be excluded from

testing later if extracting testing set will leave too few samples for training,

but it will be trained during testing stage - just will not have any true positive

testing samples.

| def MGT::TreeSamplerApp::TreeSamplerApp::markTraining | ( | self ) |

Mark final training state for each node - 'is_class' and 'n_mclass' attributes. Nodes that are not 'is_class' are ignored during training. 'is_class' means that a node can be used as a label in training the multiclass classifier for the parent node. 'n_mclass' is also set to the number of classes below the node. 'samp_n_max' is the maximum leaf sample count under this node. This method must be called after selectTestTaxa() and uses node attributes set by that method.

| def MGT::TreeSamplerApp::TreeSamplerApp::mkDbSampleCounts | ( | self ) |

Make DB tables with sample counts per taxonomy id

| def MGT::TreeSamplerApp::TreeSamplerApp::pickRandomSampleCounts | ( | self, | |

| node | |||

| ) |

Randomly pick counts of available samples from taxonomy ids below the node.

@return dictionary { taxid -> count }.

Should be called from writeTraining() which sets up sampNTrainTot and sampNTrainSelf

node property arrays.

| def MGT::TreeSamplerApp::TreeSamplerApp::selectTestTaxa | ( | self ) |

Select nodes that will be used entirely for testing.

The method aims to achieve these goals:

- create a uniform random sampling of the tree

- sample as deep into the hierarchy as possible

- select entire genera or entire species if there is no genera above them

(we call such nodes "terminal" here)

This is done recursively: for a given node:

a) all taxa selected as testing for its non-terminal children are also selected

b) and then terminal children are added at random until we reach the limit on

testing samples set for this node.

The major alternative would be to do the (b) selection from a list of all

so far non-selected terminal subnodes in an entire sub-tree of a given node

(the method would have to return such a list from each recursive call, with the

parent concatenating the lists). This would provide better chances to reach the

testing ratio target for higher order taxa. However, this would also run the risk

of non-uniform training and deteriorating performance. For example, if we have

a subtree (A,(B,C),D) and B was selected as testing for the (B,C) subtree, we could also

select C as testing for the (A,(B,C),D) subtree (it would still be used as training

for (B,C)). However, we would have no training samples from (B,C) when training (A,(B,C),D),

which can become an issue.
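The recursive scheme in steps (a) and (b) can be sketched roughly as follows. This is an illustration under stated assumptions, not the method's actual code: the Node class, the per-node limit expressed as a fixed ratio of the subtree's sample count, and the choice to skip a terminal child that would exceed the limit are all hypothetical.

```python
import random
from dataclasses import dataclass, field

@dataclass
class Node:
    name: str
    n_samp: int = 0        # samples attached directly to this node (toy data)
    is_term: bool = False  # stands in for isTestTerm(node)
    children: list = field(default_factory=list)
    is_test: bool = False

def total(node):
    """Total number of samples in the subtree."""
    return node.n_samp + sum(total(c) for c in node.children)

def mark_subtree(node):
    """Set is_test on every node of a subtree selected for testing."""
    node.is_test = True
    for child in node.children:
        mark_subtree(child)

def select_test(node, test_ratio, rng):
    """Return the number of testing samples selected under node."""
    n_test = 0
    terminals = []
    for child in node.children:
        if child.is_term:
            terminals.append(child)
        else:
            # (a) inherit whatever was selected below non-terminal children
            n_test += select_test(child, test_ratio, rng)
    limit = test_ratio * total(node)  # assumed form of the per-node limit
    rng.shuffle(terminals)
    for child in terminals:
        # (b) add whole terminal children at random while they still fit
        n_child = total(child)
        if n_test + n_child <= limit:
            mark_subtree(child)
            n_test += n_child
    return n_test

genera = [Node(f"g{i}", n_samp=10, is_term=True) for i in range(4)]
family = Node("fam", children=genera)
loose = [Node(f"h{i}", n_samp=10, is_term=True) for i in range(2)]
root = Node("root", children=[family] + loose)
n_selected = select_test(root, test_ratio=0.25, rng=random.Random(0))
```

In this toy tree one genus is picked inside the family, and the root then has no room left for either of its own terminal children. The root ends at 10 of 60 samples, short of its 25% target, which is the shortfall for higher-order taxa that the alternative strategy would reduce.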

@post Attributes are set for each node:

- is_test - if True, entire subtree is selected as testing sample. Set for every node

in such a subtree.

- has_test - if True, node has enough testing samples, but it is not 'is_test' itself.

- samp_n_tot_test - number of testing samples for this node

- samp_n_tot_train - number of training samples for this node.

- isTestTerm - split boundaries (entire subtrees can have either True or False)

Note that the presence of "unclassified" sequence still creates problems:

selection of even one testing sample excludes from training all unclassified nodes

which are immediate children of all nodes in testing sample lineage. Thus, any of these nodes

can become non-trainable even if it is trainable w/o testing. However, we need to accumulate

the testing sequence for use by upper nodes, so this seems unavoidable. To check the effect

of this tradeoff, temporarily make TaxaNode.isUnclassified() always return False and re-run

the testing/training selection. So far this experiment has shown little impact.

| def MGT::TreeSamplerApp::TreeSamplerApp::setIsUnderUnclassMem | ( | self ) |

Set an attribute 'isUnderUnclass' for in-memory tree nodes. It flags all nodes that have "unclassified" super-node somewhere in their lineage.
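One way to set such a flag is a single top-down pass that carries "an unclassified ancestor has been seen" down the lineage. The sketch below is illustrative only: the Node class is a stand-in, and the name-based isUnclassified() rule is an assumption, since the real TaxaNode.isUnclassified() is not shown on this page.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    name: str
    children: list = field(default_factory=list)
    isUnderUnclass: bool = False

    def isUnclassified(self):
        # Hypothetical rule; the real TaxaNode.isUnclassified() may differ.
        return self.name.startswith("unclassified")

def set_is_under_unclass(node, under=False):
    """Flag node if any super-node in its lineage is 'unclassified'."""
    node.isUnderUnclass = under
    below = under or node.isUnclassified()
    for child in node.children:
        set_is_under_unclass(child, below)

u2 = Node("strain u2")
u1 = Node("species u1", children=[u2])
unclass = Node("unclassified Bacteria", children=[u1])
a1 = Node("species a1")
root = Node("root", children=[Node("genus A", children=[a1]), unclass])
set_is_under_unclass(root)
```

Note that the "unclassified" node itself is not flagged here, only the nodes below it, following the wording "super-node somewhere in their lineage".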

| def MGT::TreeSamplerApp::TreeSamplerApp::statTestTaxa | ( | self ) |

Save SQL statistics about testing/training set selection. Call after markTraining()

| def MGT::TreeSamplerApp::TreeSamplerApp::unused_lowRankTestPairs | ( | self, | |

| rank, | |||

| superRank | |||

| ) |

Return set of unique pairs (testing node, first supernode).

@param rank - entire nodes of this rank will be selected as testing samples (e.g. genus).

@param superRank - a second member of each pair will have at least this rank (e.g. family). 'superRank' nodes might not be 'isTrainable'. That should be filtered later.

| def MGT::TreeSamplerApp::TreeSamplerApp::unused_mkDbTestingTaxa | ( | self, | |

| rank = "genus", | |||

| superRank = "family" | |||

| ) |

Select complete 'rank' nodes uniformly distributed across 'superRank' super-nodes.

| def MGT::TreeSamplerApp::TreeSamplerApp::unused_sampleTestPairs | ( | self, | |

| pairs | |||

| ) |

Randomly sample a list of (testing node, first supernode) pairs obtained with unused_lowRankTestPairs().

| def MGT::TreeSamplerApp::TreeSamplerApp::writeTesting | ( | self, | |

| svmWriter | |||

| ) |

Write testing data set.

@pre Nodes have the following attributes:

- is_class - True for nodes that can serve as classes in SVM

- n_mclass - number of trainable sub-classes

- samp_n_tot_test - number of testing samples in a subtree

- is_test - True if a subtree is used for testing

This method must be called after markTraining() sets needed node attributes.

| def MGT::TreeSamplerApp::TreeSamplerApp::writeTraining | ( | self, | |

| trNodes, | |||

| svmWriter | |||

| ) |

Write training data set for each eligible node.

@pre Nodes have the following attributes:

- is_class - True for nodes that can serve as classes in SVM

- n_mclass - number of trainable sub-classes

- samp_n_tot_test - number of testing samples in a subtree

- samp_n_tot_train - number of training samples in a subtree (excluding testing)

- is_test - True if a subtree is used for testing

@post Data sets ready for feature generation are saved.

@post taxaTree.getRootNode().setIndex() resets internal node index.

This method must be called after markTraining() sets needed node attributes.

Member Data Documentation

- Todo:

- make conditional on not is_test

The documentation for this class was generated from the following file:

- mgtaxa/MGT/TreeSamplerApp.py

1.7.2
